With over two decades of programming experience in the eLearning industry, including almost half a decade of intensive work in VR/AR/MR/XR with both of the top game engines (Unity and Unreal Engine), I’ve developed numerous applications, simulations, and demonstrations specifically for the Oculus (Meta) Quest and HoloLens headsets. Though I have quite a bit of Unreal Engine experience, I prefer Unity. I VERY much prefer Unity…

As there is so much demand right now for VR Training, I quickly put together a demo in just a couple of days to show what can be done in a very short time. After viewing some samples of what is being done for the medical industry, I decided on a Tuesday morning to create this project. It is now early Wednesday afternoon, and I’m posting a video of what can be done in less than two days.

There are many choices to make when starting a virtual reality project. For this demo, I chose not to use one of the polished framework plugins (one great example is VR Interaction Framework on the Unity Asset Store). Instead, I started from scratch with a bare-bones setup and the latest Oculus SDK (v39). I plan to use it to experiment with passthrough video and some of the new Oculus Interaction features.

This demo uses Hand Tracking, so controllers are not required! A user who has never used VR before doesn’t have to fumble with unfamiliar buttons. They can just put on the headset and move their hands and fingers naturally, grabbing and manipulating objects without holding controllers or pressing buttons they can’t see. Hand Tracking on the Quest was upgraded very recently, and the accuracy is amazing!

In less than two days, I set up Oculus Integration, grabbed a handful of free 3D model packs from the Asset Store, configured the hands and grab interactions, and wrote a few standard C# scripts to handle events and display results.

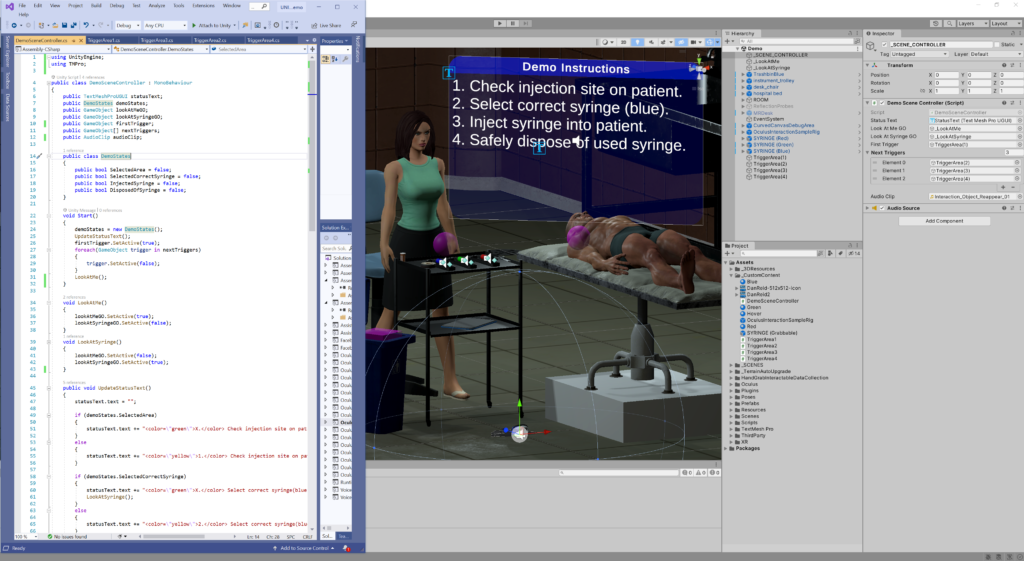

For fun, I made the instructor/bystander look at the user. After the user picks up the syringe, her gaze follows it, then returns to the user once the syringe has been disposed of. If this were a true production-level project, much more time would be spent polishing it, and there would probably be some communication with an external Learning Management System (LMS) to log in a “student” and track and score their progress. There might even be a multiplayer element for a remote training class. That said, please view this demo as a simple “what can I put together in a very short amount of time?” prototype. Below is a screenshot of the editor view showing part of one of the main scripts I wrote for this project.
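The gaze behavior comes down to picking a look-at target each frame and turning toward it. Here is a minimal sketch of that logic in plain Python. It assumes the scene exposes world-space positions for the user's head and the syringe, plus a "held" flag. All of these names are hypothetical. In Unity, the same idea would live in a C# script, probably built on `Transform.LookAt` or `Quaternion.RotateTowards`.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GazeState:
    """Hypothetical per-frame scene state (world-space positions)."""
    user_head: Vec3
    syringe: Vec3
    syringe_held: bool  # True from pick-up until the syringe is disposed of


def gaze_target(state: GazeState) -> Vec3:
    """Look at the syringe while it is held, otherwise at the user."""
    return state.syringe if state.syringe_held else state.user_head


def yaw_towards(eye: Vec3, target: Vec3) -> float:
    """Yaw in degrees about the vertical (Y) axis from eye to target.
    Follows Unity's convention: 0 faces +Z, positive yaw turns toward +X."""
    dx = target[0] - eye[0]
    dz = target[2] - eye[2]
    return math.degrees(math.atan2(dx, dz))


def step_yaw(current: float, desired: float, max_step: float) -> float:
    """Turn from current toward desired by at most max_step degrees,
    taking the shorter way around the circle."""
    delta = (desired - current + 180.0) % 360.0 - 180.0
    if abs(delta) <= max_step:
        return desired
    return current + math.copysign(max_step, delta)
```

Each frame, the bystander's head yaw could be updated with something like `step_yaw(yaw, yaw_towards(head_pos, gaze_target(state)), turn_speed * dt)`. Her gaze then eases toward the syringe instead of snapping to it.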

After quickly creating the above demo, I decided to put together another one, this time using VR Interaction Framework (VRIF). I also grabbed a few more free model assets. I wanted to create a quick sample where you assemble an object from parts (using snapping). This demo was again created in less than two days (by which I mean spending a little free time here and there over a couple of days). A good portion of that time was spent perusing the Asset Store for the models I wanted to use and setting up the scene. The following day I configured everything and wrote some C# scripts. This demo uses the Quest’s controllers, so buttons are used for gripping objects and moving around (snap turning and smooth walking). Again, very nice results very quickly.
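VRIF ships its own snapping components, and the sketch below does not reproduce them. It only shows the underlying idea behind snapping parts into an assembly. When a part is released, it looks for the nearest free socket that accepts its type within a small radius. If it finds one, the part jumps to that socket's position. Otherwise it stays where it was dropped. The `Socket` structure, part types, and the 5 cm radius are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Socket:
    """Hypothetical snap point on the object being assembled."""
    name: str
    position: Vec3
    accepts: str            # part type this socket accepts
    occupied: bool = False


def find_snap_socket(part_type: str, part_pos: Vec3,
                     sockets: Sequence[Socket],
                     snap_radius: float = 0.05) -> Optional[Socket]:
    """Nearest free, compatible socket within snap_radius (metres), else None."""
    best: Optional[Socket] = None
    best_dist = snap_radius
    for socket in sockets:
        if socket.occupied or socket.accepts != part_type:
            continue
        d = math.dist(part_pos, socket.position)
        if d <= best_dist:
            best, best_dist = socket, d
    return best


def release_part(part_type: str, part_pos: Vec3,
                 sockets: Sequence[Socket],
                 snap_radius: float = 0.05) -> Tuple[Vec3, Optional[str]]:
    """When the grip is released, snap into a socket if one is close enough;
    otherwise leave the part where it was dropped."""
    socket = find_snap_socket(part_type, part_pos, sockets, snap_radius)
    if socket is None:
        return part_pos, None
    socket.occupied = True
    return socket.position, socket.name
```

Marking a socket as occupied means two identical parts cannot both snap into the same slot.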

I’m currently putting together a few more demos as time permits. The next video shows an example of an X-Ray interaction. I am using simple graphics for prototyping, though more realistic models would be used in a production-ready demo. I added hinge joints to the armature of the X-Ray camera and the overhead light for “realism”, and I’m using a separate camera and a hidden skeleton to simulate the X-Ray viewer. A polished version would not show the X-Ray in real time. It would only become visible on a more realistic display model after the actual X-Ray is triggered. The demo is meant to show how this can be accomplished.

Next up, I spent a day building a new sample project from scratch to experiment with Photon Unity Networking (PUN2) and multi-user support. The next video shows a working prototype for experimentation. It already connects to PUN2 successfully, and I used only freely available models to create the scene.

Using VRIF, I quickly put together a simple interaction that controls a valve through the steering wheel interaction. The wheel’s rotation value moves the game object’s relative (local) Z-position as it turns, which sets how deep the wheel (valve) sits in the pipe. This simulates the valve’s “screw” motion: turning it clockwise moves it into the pipe (closing the valve), and turning it counter-clockwise moves it back out. The same value also drives the flame emitter. As the valve closes, the emitter produces fewer particles, and when the value reaches “0” the flame turns off.
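The valve is essentially one normalized "closure" value derived from the wheel angle, which then drives two outputs. Here is a minimal sketch of that mapping. It assumes the wheel reports its accumulated clockwise rotation in degrees. The number of turns to close, the travel distance, and the maximum emission rate are hypothetical tuning values. In Unity, the outputs would presumably be applied to the wheel's `localPosition.z` and to the particle system's emission rate.

```python
from typing import Tuple


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def valve_state(wheel_angle_deg: float,
                turns_to_close: float = 3.0,
                travel: float = 0.04,
                max_emission: float = 200.0) -> Tuple[float, float]:
    """Map the wheel's accumulated clockwise rotation to (z_offset, emission).

    wheel_angle_deg: 0 = fully open; turns_to_close * 360 = fully closed.
    z_offset: how far (metres) the wheel has screwed into the pipe.
    emission: particles per second for the flame; 0 means the flame is off.
    """
    closure = clamp(wheel_angle_deg / (turns_to_close * 360.0), 0.0, 1.0)
    z_offset = closure * travel
    emission = (1.0 - closure) * max_emission
    return z_offset, emission
```

Clamping `closure` keeps over-rotation, or turning past fully open, from pushing the wheel through the pipe or producing a negative emission rate.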

Next, I could tie in the multi-user elements, using PUN2 to pass information between users and synchronize elements in each user’s scene so that effects are visible to everyone. Events could be strung together using arrays of “action” classes and a main scene function that triggers, stores, logs, and communicates events based on user interactions (i.e., training data such as completions, scores, and failures).
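Here is a rough sketch of the "array of action classes plus a main scene function" idea. The scenario is an ordered list of steps. Every user interaction is checked against the expected next step, scored, logged, and handed to an optional callback, which could forward it to an LMS or over the network. All class and field names here are hypothetical, and strict step ordering is an assumption. A real scenario might allow some steps in any order.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class TrainingAction:
    """One step in a training scenario (hypothetical structure)."""
    action_id: str
    description: str
    points: int = 10


@dataclass
class ScenarioRunner:
    """Hypothetical 'main scene' controller that checks each user interaction
    against the expected step, keeps score, and logs every attempt."""
    actions: List[TrainingAction]
    on_event: Optional[Callable[[Dict], None]] = None  # e.g. send to an LMS or over the network
    index: int = 0
    score: int = 0
    log: List[Dict] = field(default_factory=list)

    @property
    def complete(self) -> bool:
        return self.index >= len(self.actions)

    def report(self, action_id: str) -> bool:
        """Record an interaction; return True if it was the expected next step."""
        if self.complete:
            return False
        expected = self.actions[self.index]
        passed = action_id == expected.action_id
        if passed:
            self.score += expected.points
            self.index += 1
        event = {"expected": expected.action_id, "got": action_id,
                 "result": "pass" if passed else "fail", "score": self.score}
        self.log.append(event)
        if self.on_event is not None:
            self.on_event(event)
        return passed
```

Because every attempt is logged, including failures, the same log could feed completions, scores, and failure counts to whatever tracking system sits behind `on_event`.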

Using character rigs and inverse kinematics, I can represent each networked user as an avatar, driven by their network-synced head and hand positions for multi-user visualization. Photon also offers voice-over-network capabilities (Photon Voice, which integrates with PUN2). For instance, a handheld walkie-talkie could be attached to the user’s “belt”, with a “push-to-talk” event that triggers sending audio to all users. Data from each “station” could also be synchronized to every user, as well as to a “command station” that visually represents the status of each node in a simulated control room. Better models would be used in a more polished project. VR training with an almost sandbox-video-game feel is absolutely achievable.
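For the command-station idea, the core logic is keeping the latest status per station and showing the worst one. Here is a minimal sketch, with the networking left out. With PUN2, updates might arrive through RPCs or custom properties, but that transport is an assumption and is not shown. The status levels, `StationUpdate` fields, and timestamp-based staleness check are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict

SEVERITY = {"ok": 0, "warning": 1, "alarm": 2}  # hypothetical status levels


@dataclass
class StationUpdate:
    station_id: str
    status: str       # one of SEVERITY's keys
    timestamp: float  # sender's clock, used to discard stale updates


class CommandStation:
    def __init__(self) -> None:
        self.latest: Dict[str, StationUpdate] = {}

    def receive(self, update: StationUpdate) -> None:
        """Keep only the newest update per station, in case messages arrive late."""
        prev = self.latest.get(update.station_id)
        if prev is None or update.timestamp >= prev.timestamp:
            self.latest[update.station_id] = update

    def overall(self) -> str:
        """Worst status across all stations, for the control-room display."""
        if not self.latest:
            return "ok"
        worst = max(self.latest.values(), key=lambda u: SEVERITY[u.status])
        return worst.status
```

Every user's client could run the same aggregation on the same updates, so each view of the control room stays consistent.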

Below is a picture of my desk space: a decent little resin 3D printer and my collection of Warhammer 40K/Necromunda models that I’ve been acquiring over the years. Simple hobbies, but they keep me entertained. 🙂

A little bit about me: I love camping! From humble beginnings in a tent, to a small trailer, up to an old monstrous Class-A, and back down again to our comfortable Class-C. That was the sweet spot! After the last couple of years of everything being shut down, I can’t wait to relax in the solitude of a quiet campground away from the daily grind. I have to admit, I still bring a Quest with me when I’m out camping. If the weather doesn’t cooperate and it’s pouring rain outside, I can sit in the RV, bring up a video in BigScreen VR, and feel like I’m in a vast movie theater! Ah, technology…

And just for fun… A short video of “Me and JD”, shenanigans with one of my old pals from the old days back in the office (added here because I miss our antics):